Le besoin => enregistrement de réunion => Récupérer un transcript => faire une synthèse (MAIA / BPCE ou Chat GPT ou Gemini ou …)

- Différentes solutions :

- Passer par de la reconnaissance vocale en ligne (Ps confidentialité) => Open AI et Google ont des API pour cela (Token requis?)

- Passer par la reconnaissance en local => Installer OpenAI Whisper

- Install OPEN AI WISPER sous Linux (ce serveur)

https://openai.com/index/whisper/

Set up Your Environment

For this demonstration, I’m running Ubuntu under WSL in Windows. The instructions for setting it up in Ubuntu proper are the same. I have yet to try this on a Mac, but I will.

The first thing you do, of course, is update the system.

sudo apt update

sudo apt upgrade

Now, you will need some base packages installed on the system for this to work. Mainly FFmpeg, which can be installed with this:

sudo apt install ffmpeg

You should be good to go. Let’s create a Python environment:

mkdir whispertest && cd whispertest

python3 -m venv whispertest

source whispertest/bin/activate

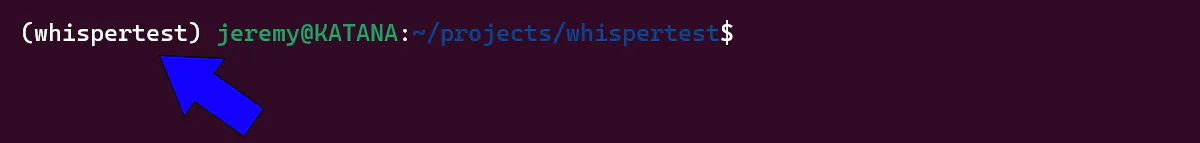

Remember, you should see the environment name to the left of your prompt:

Then, we’ll need to install the Rust setup tools:

pip install setuptools-rust

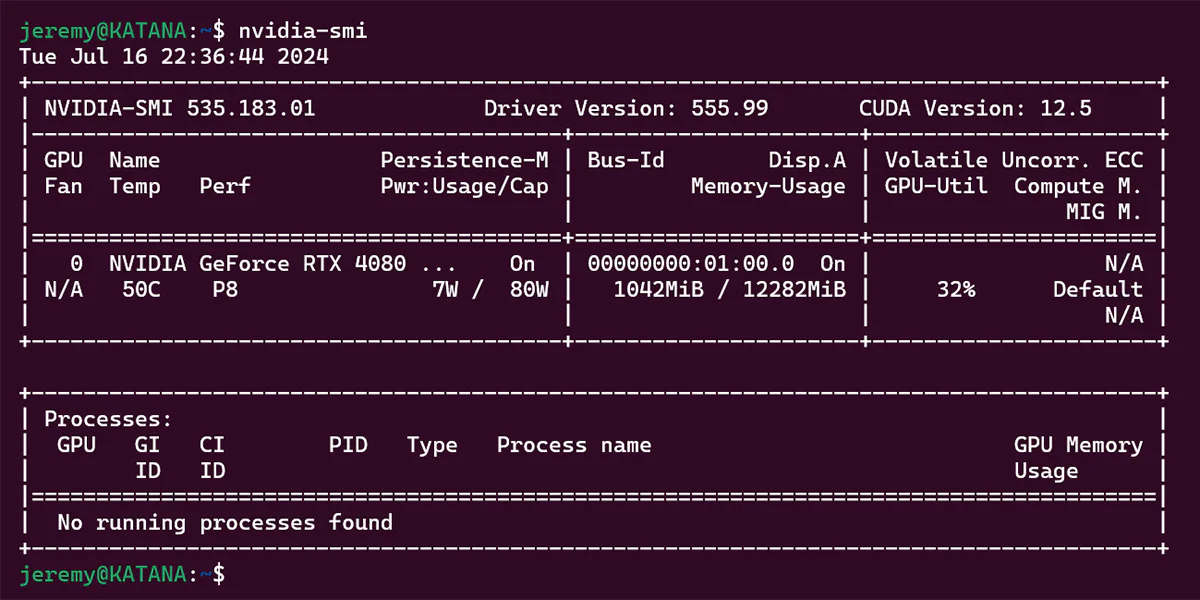

Note: If you have an NVidia GPU

If you have an NVIDIA GPU, you must install the NVIDIA drivers for this to work properly.

You can verify they’re installed correctly by typing:

nvidia-smi

And you should see something like this:

Install Whisper

Whisper runs as an executable within your Python environment. It’s pretty cool.

The best way to install it is:

pip install -U openai-whisper

But you can also pull the latest version straight from the repository if you like:

pip install git+https://github.com/openai/whisper.git

Either way, it will install a bunch of packages, so go get some ice water. When it’s done, the whisper executable will be installed.

I recorded a sample file, and here’s how we can run it.

whisper [audio.flac audio.mp3 audio.wav] --model [model size]

I will start with the tiny model just to see how it performs. Here’s a list of available models

| Size | Parameters | English-only model | Multilingual model | Required VRAM | Relative speed |

|---|---|---|---|---|---|

| tiny | 39 M | tiny.en |

tiny |

~1 GB | ~32x |

| base | 74 M | base.en |

base |

~1 GB | ~16x |

| small | 244 M | small.en |

small |

~2 GB | ~6x |

| medium | 769 M | medium.en |

medium |

~5 GB | ~2x |

| large | 1550 M | N/A | large |

~10 GB | 1x |

I’ll start with the smallest model and see its accuracy, then work my way up if needed.

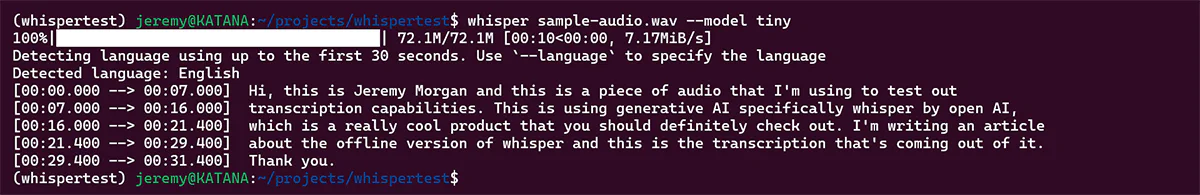

Here’s the command I ran to parse and extract from my sample file:

whisper sample-audio.wav --model tiny

And lucky for me, it was transcribed perfectly:

Your results will vary. If you don’t like the output you can always step it up to a larger model, which will take more memory and a longer amount of time.

So, what else can you do with this tool?

Building a Cool Python Script

The Whisper service has a bunch of cool features that I don’t use, like translation! But what if we want to script this stuff, like processing 100 audio files or something? Building a Python script to run it is easy.

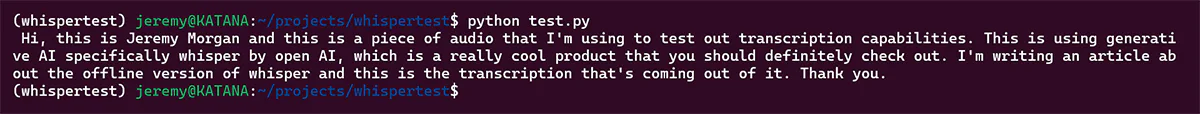

Here’s a script straight from the GitHub page:

import whisper

model = whisper.load_model("base")

result = model.transcribe("audio.mp3")

print(result["text"])

And when I run it, it shows clean text output.

You can of course, write this to its own text file:

with open("output.txt", "w") as file:

file.write(result["text"])

There are tons of options available. It also does transcriptions in other languages as well.